Building an AI Translation App With NextJS by Extracting JSON From OpenAI's API

Large language models (LLMs) such as OpenAI's GPT or Meta's LLaMA allow developers and everyday users to receive useful information, such as code, advice, or factual answers, by prompting the model in plain language. Realizing the value of such models, many developers have begun working closely with LLMs to enhance their development experience. Indeed, the notion of AI pair programming is no longer science fiction, with AI tools such as GitHub Copilot being adopted for daily work by 92 percent of developers according to one survey.

One constraint with LLMs is that, by design, they take plain language as input and produce plain language as output. While this is very useful for the average person interacting with chat bots such as ChatGPT, there are use cases where having an LLM consistently respond in a particular format, such as XML or JSON, is preferred. To this end, the field of "prompt engineering" has emerged, where individuals craft prompts to shape the content LLMs respond with, for example to consistently retrieve JSON formatted text from an LLM for use in a web application. Prompt engineering and additional fine-tuning measures can increase the probability that the developer will receive a response in the format they desire, but the results are inherently inconsistent and the approach can feel "hacky".

Addressing this constraint, OpenAI recently released a function calling feature for its API. This feature allows developers to request JSON data structured according to a schema they supply from the GPT-3.5 and GPT-4 models. In this tutorial, we are going to use OpenAI's function calling feature to build a simple web application that translates a user's English sentence to another language while identifying and translating nouns, verbs, and those verbs' conjugations. We will be translating English to Spanish, but I encourage you to follow along with a different pair of languages if you're interested!

Purpose

This tutorial is designed to teach you concepts about front end web development using technologies such as React, NextJS and TailwindCSS. It will also serve as an introduction to using the OpenAI API to enhance the functionality of your projects on the web. In particular, you can expect to learn how to use OpenAI's function calling feature to retrieve JSON formatted text from an LLM.

Approach

We are going to approach building this application using NextJS and Typescript. For this reason, it is important that you are familiar with basic principles of both React development and Typescript.

Querying OpenAI

NextJS supports API routes, allowing us to build public APIs directly in our NextJS project. We will create a single API endpoint that is responsible for sending a request to the GPT3.5 LLM through OpenAI's API and awaiting a response that will contain the JSON we use in our translation application.

Managing State

We will maintain global state for the JSON object representing our translated English sentence using Zustand. Zustand is a state management library for React applications that provides a simple and flexible API to manage and share state across components.

Styling

We will scaffold our NextJS project with TailwindCSS.

Tailwind CSS is a utility-first CSS framework that simplifies styling in web development by providing low-level utility classes for building designs directly in your markup. We will also be using DaisyUI which is a component library built on TailwindCSS.

Step One: Create a NextJS Project

Let's start building this application. If you don't already have Node.js and a package manager such as npm installed, install them before continuing. In this tutorial we will be using npm to manage our dependencies. NextJS maintains a CLI to scaffold a new NextJS project. In a terminal or command shell, navigate to a suitable directory to store your project and enter the following:

npx create-next-app@latest

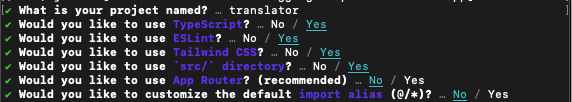

The create-next-app program will prompt you for several options when scaffolding your project. Name your project

something (we named ours lang-ai-next) then select Yes when asked whether to use Typescript, ESLint, TailwindCSS, and the default /src directory. We will be using NextJS's original Pages Router rather than the newer App Router introduced in Version 13 of NextJS. See more about that here . Your completed prompts should look something like this,

Hit enter and create-next-app will generate a new NextJS project for you. Open the project in your favourite code editor and you should find the project structure looks something like this:

|-- node_modules
|-- public
|-- src
|   |-- pages
|   |   |-- api
|   |   |   |-- hello.ts
|   |   |-- _app.tsx
|   |   |-- _document.tsx
|   |   |-- index.tsx
|   |-- styles
|   |   |-- globals.css
|-- .eslintrc.json
|-- .gitignore
|-- next-env.d.ts
|-- next.config.js
|-- package-lock.json
|-- package.json
|-- postcss.config.js
|-- README.md
|-- tailwind.config.ts
|-- tsconfig.json

Let's install the dependencies needed for this project. Run this command to install DaisyUI as a dev dependency:

npm i -D daisyui@latest

Next run the following command to install other dependencies, which we will describe as they are implemented:

npm i ajv axios openai zustand

Step Two: Creating Our API

We will use NextJS's built-in API routes to create an endpoint that posts a prompt to the OpenAI API and returns JSON representing our translated sentence. Under /src/pages/api , create a new file called translator.ts . This file will export a handler function that takes HTTP request and response objects as parameters. Let's import the TypeScript types and packages we need to build the endpoint.

//translator.ts

import type { NextApiRequest, NextApiResponse } from "next";

import OpenAI from "openai";

import Ajv from "ajv";

const ajv = new Ajv();

Here he have imported the OpenAI class which will be used to create an object that interfaces with the OpenAI API. We also imported ajv which is JSON-validation package that we will use to validate the format of JSON returned to us through the OpenAI API. In addition, we imported type definitions for the request and response objects used as parameters for our NextJS endpoint handler function.

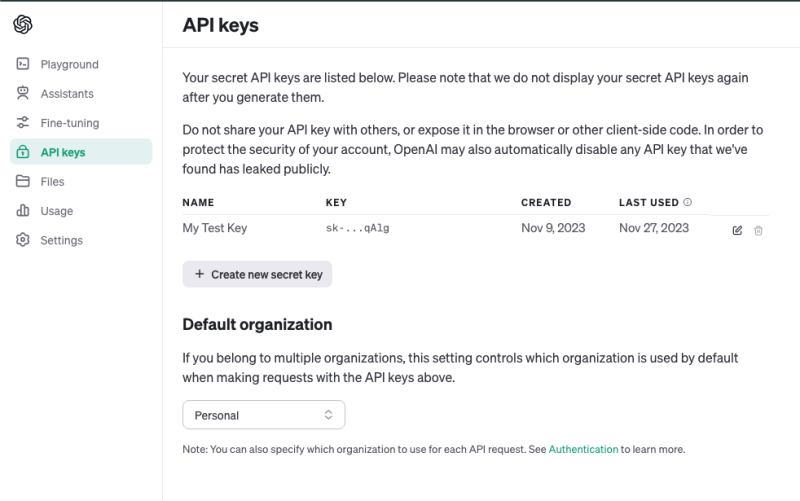

Now let's create an instance of the OpenAI class and supply it an OpenAI API key to communicate with the LLM.Note: OpenAI's API is not free but it is very inexpensive, especially when using the GPT3.5 model. Navigate to the OpenAI API site, create an account if you don't already have one and login to the dashboard . You will need to supply a payment method in order to obtain an API key. You can create and find your API keys using the menu on the left of the dashboard:

NextJS natively supports reading environment variables from a .env.local file in the root directory of your project. Go ahead and create that file with the following, replacing [YOUR_KEY_HERE] with the API key you obtained from OpenAI's dashboard,

//.env.local

API_KEY=[YOUR_KEY_HERE]

Now we can update our translator API to include a new OpenAI object configured with our API key using the environment variable we defined,

//translator.ts

import type { NextApiRequest, NextApiResponse } from "next";

import OpenAI from "openai";

import Ajv from "ajv";

const ajv = new Ajv();

const openai = new OpenAI({

apiKey: process.env.API_KEY,

});
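If API_KEY is missing, for instance because .env.local was never created, the problem otherwise only surfaces once the first request to OpenAI fails. A minimal sketch of an early check follows. requireEnv is a hypothetical helper, not part of NextJS or the openai package, and it takes the environment as a plain object so the snippet can be tried on its own.

```typescript
// Hypothetical helper (not part of NextJS or the openai package):
// returns the named variable, or throws a descriptive error if it is unset.
type Env = Record<string, string | undefined>;

function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing environment variable: ${name}`);
  }
  return value;
}

// Stand-in for process.env, used here only for illustration.
const exampleEnv: Env = { API_KEY: "sk-example" };
const apiKey = requireEnv(exampleEnv, "API_KEY");
```

In translator.ts the same helper could be called as requireEnv(process.env, "API_KEY") when constructing the OpenAI object.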

Next, let's define a JSON schema that describes the shape of JSON we expect the OpenAI API to return to us when we prompt it with an English sentence. We can use the description property within the schema to supply additional information to the model about what it should look for when creating the JSON text. Let's first declare the schema as a JavaScript object and add properties to the schema, flagging them as required so that the model always produces them in the final object,

const translationSchema = {

type: "object",

description:

"A schema analyzing the verb and noun contents of an English sentence translated into Spanish. Contains the Spanish translation of the sentence, the Spanish nouns in the translated sentence with their English translations, and the infinitive form of each Spanish verb with its conjugations in the present tense.",

properties: {

sentence: {

type: "string",

description: "The English sentence to analyze.",

},

translatedSentence: {

type: "string",

description: "The Spanish translation of the sentence to be analyzed.",

},

nouns: {

type: "array",

description:

"An array of nouns to be analyzed. These are to be identified from the prompt sentence",

items: {

type: "object",

properties: {

noun: {

type: "string",

description: "The Spanish noun to be translated",

},

translation: {

type: "string",

description: "The English translation of the noun",

},

},

required: ["noun", "translation"],

},

},

verbs: {

type: "array",

description:

"An array of verbs to be analyzed. These are to be identified from the prompt sentence",

items: {

type: "object",

description:

"Each item is a verb to be analyzed from the prompt with an infinitive form and conjugations in the present tense.",

properties: {

infinitive: {

type: "string",

description: "The infinitive form of the verb in Spanish.",

},

translation: {

type: "string",

description:

"The English translation of the verb with 'to' in front of it. Example: 'to be'",

},

conjugations: {

type: "object",

properties: {

present: {

type: "object",

description:

"The conjugations of the verb in the present tense",

properties: {

yo: { type: "string" },

tu: { type: "string" },

el: { type: "string" },

nosotros: { type: "string" },

vosotros: { type: "string" },

ellos: { type: "string" },

},

required: ["yo", "tu", "el", "nosotros", "vosotros", "ellos"],

},

},

required: ["present"],

},

},

required: ["infinitive", "translation", "conjugations"],

},

},

},

required: ["sentence", "translatedSentence", "nouns", "verbs"],

};

We included four main properties in the schema. The sentence and translatedSentence properties contain the user's English sentence and its Spanish translation. The nouns property contains an array of nouns identified by the AI, each paired with its translation, and the verbs property similarly contains each verb's translation along with its infinitive and present tense conjugations. Notice that we provide description properties throughout the schema. These tell the AI what content it should use to fill out each field.

Next, let's create TypeScript types that describe the shape of the JavaScript object we will obtain after parsing the JSON response from the model. Create a new types folder at /src/types and add a translationTypes.ts file to it with the following types,

//translationTypes.ts

export interface NounObject {

noun: string;

translation: string;

}

export interface ConjugationPronounsObject {

yo: string;

tu: string;

el: string;

nosotros: string;

vosotros: string;

ellos: string;

}

//feel free to change the language you are translating to or add more verb tenses

export interface VerbConjugationsObject {

present: ConjugationPronounsObject;

}

export interface VerbObject {

infinitive: string;

translation: string;

conjugations: VerbConjugationsObject;

}

export interface TranslationObject {

sentence: string;

translatedSentence: string;

nouns: NounObject[];

verbs: VerbObject[];

}

export interface TranslationResponseObject {

success: boolean;

translation: TranslationObject | null;

}

The TranslationObject mirrors the JSON schema we expect to receive from the call to the OpenAI API with our sentence to translate. The TranslationResponseObject includes a flag for whether the translation was a success.
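To make these types concrete, here is a hand-written example of the object we hope to receive for the English sentence "I eat apples". The values were written by hand for illustration, not captured from the model, and the interfaces are repeated in compact form so the snippet stands alone.

```typescript
interface NounObject {
  noun: string;
  translation: string;
}

interface ConjugationPronounsObject {
  yo: string;
  tu: string;
  el: string;
  nosotros: string;
  vosotros: string;
  ellos: string;
}

interface VerbObject {
  infinitive: string;
  translation: string;
  conjugations: { present: ConjugationPronounsObject };
}

interface TranslationObject {
  sentence: string;
  translatedSentence: string;
  nouns: NounObject[];
  verbs: VerbObject[];
}

// Illustrative data only: written by hand, not a recorded model response.
const example: TranslationObject = {
  sentence: "I eat apples",
  translatedSentence: "Yo como manzanas",
  nouns: [{ noun: "manzanas", translation: "apples" }],
  verbs: [
    {
      infinitive: "comer",
      translation: "to eat",
      conjugations: {
        present: {
          yo: "como",
          tu: "comes",
          el: "come",
          nosotros: "comemos",
          vosotros: "coméis",
          ellos: "comen",
        },
      },
    },
  ],
};
```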

Let's go back to our translator.ts endpoint and implement the types. First import them into the endpoint file,

//translator.ts

import type { NextApiRequest, NextApiResponse } from "next";

import type {

TranslationResponseObject,

TranslationObject,

} from "@/types/translationTypes";

import OpenAI from "openai";

import Ajv from "ajv";

const ajv = new Ajv();

...

Next, let's create a validation function by compiling our JSON schema with ajv. We will also set up our API handler function, which NextJS exposes to our client code at /api/translator,

//translator.ts

...

const validate = ajv.compile(translationSchema);

export default async function handler(

req: NextApiRequest,

res: NextApiResponse

) {

const prompt = req.body.prompt;

}
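Because req.body is untyped, nothing guarantees that the client actually sent a non-empty string. A minimal sketch of a guard follows. parsePrompt and the 500-character limit are assumptions made for illustration and are not part of the original endpoint.

```typescript
// Assumed limit, chosen only for illustration.
const MAX_PROMPT_LENGTH = 500;

// Hypothetical helper: returns a trimmed prompt, or null if the body is unusable.
function parsePrompt(body: unknown): string | null {
  if (typeof body !== "object" || body === null) return null;
  const prompt = (body as { prompt?: unknown }).prompt;
  if (typeof prompt !== "string") return null;
  const trimmed = prompt.trim();
  if (trimmed === "" || trimmed.length > MAX_PROMPT_LENGTH) return null;
  return trimmed;
}
```

Inside the handler, a null result could be answered with res.status(400).json({ success: false }) before any request is sent to OpenAI.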

So far, our endpoint handler function just extracts the prompt property from the client's request body JSON and stores it in a local variable. We want to use that prompt as input for our call to the OpenAI API so that the AI model can produce JSON following our translationSchema,

//translator.ts

...

const validate = ajv.compile(translationSchema);

export default async function handler(

req: NextApiRequest,

res: NextApiResponse

) {

const prompt = req.body.prompt;

const completion = await openai.chat.completions.create({

model: "gpt-3.5-turbo-1106",

response_format: { type: "json_object" },

messages: [

{

role: "system",

content: "You are a helpful assistant that returns JSON.",

},

{

role: "user",

content: prompt,

},

],

functions: [

{

name: "find_verbs",

description:

"Translate an English sentence into Spanish, then find the nouns and verbs in the translation and return each verb's conjugations in the present tense according to the schema provided.",

parameters: translationSchema,

},

],

function_call: { name: "find_verbs" },

temperature: 0,

});

}

Here we await a call to the OpenAI API. We are requesting a chat completion from the GPT-3.5 Turbo model and saving its response in a variable called completion . The create function's arguments object accepts an array of messages that describe the "conversation" we are interested in having with the model. We supply a system message to the model, and because we set response_format to json_object we must mention JSON somewhere in our messages, which the system message does. Our user message simply includes our prompt, which will be an English sentence we want to translate. Next we supply a functions property to the arguments object and define a new function, find_verbs . We describe the function using the description property and then supply our JSON schema as its parameters . This steers the model towards JSON matching the schema when the function call is invoked. Notice we explicitly request the model to invoke our find_verbs function here: function_call: { name: "find_verbs" } . We set a temperature of 0 on the API call, which reduces the randomness of the returned content.
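The part of the response we care about is small. The sketch below uses a hand-written object in place of a real API response to show where the function call sits. The two interfaces are simplified assumptions covering only the fields used in this tutorial, not the full types exported by the openai package. Note that arguments arrives as a JSON string rather than an object, so it has to be parsed.

```typescript
// Simplified stand-ins for the response types (assumptions for illustration).
interface FunctionCallSketch {
  name: string;
  arguments: string; // a JSON string, not a parsed object
}

interface CompletionSketch {
  choices: { message: { function_call?: FunctionCallSketch } }[];
}

// Hand-written mock, not a recorded API response.
const mockCompletion: CompletionSketch = {
  choices: [
    {
      message: {
        function_call: {
          name: "find_verbs",
          arguments:
            '{"sentence":"I eat apples","translatedSentence":"Yo como manzanas"}',
        },
      },
    },
  ],
};

const call = mockCompletion.choices[0]?.message.function_call;
const parsed: unknown = call ? JSON.parse(call.arguments) : null;
```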

Next, let's add some error handling to the API handler function and an actual response to the client when the endpoint is hit. Under the completion variable, insert the following code,

//translator.ts

...

export default async function handler(

req: NextApiRequest,

res: NextApiResponse

) {

...

try {

const functionCall = completion.choices[0].message.function_call;

if (functionCall) {

const argumentsJSON: TranslationObject = JSON.parse(

functionCall?.arguments

);

// Validate the arguments

const isValid = validate(argumentsJSON);

if (!isValid) {

return res.status(500).json({ success: false });

}

// Create a new translation object

const translationObject: TranslationResponseObject = {

success: true,

translation: argumentsJSON,

};

return res.status(200).json(translationObject);

}

return res.status(500).json({ success: false });

} catch (error) {

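// JSON.parse throws on malformed JSON; report a failure instead of rethrowing

return res.status(500).json({ success: false });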

}

}

First we extract the returned function call from the OpenAI API response, check it exists, and then parse its arguments property into a JavaScript object. Notice we annotate the object as a TranslationObject, the type we defined above; this is a compile-time label only, so next we validate the parsed JSON against our schema using ajv . If the returned JSON is invalid we respond with an error code and no translation. If the JSON is valid we create a new TranslationResponseObject and respond to our client with it, flagging the translation as a success.
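A type annotation does nothing at runtime, and JSON.parse returns whatever the model produced, which is why the check with ajv matters. To illustrate the kind of check involved, here is a hand-written guard for a single noun entry. It is a simplified stand-in for illustration, not how ajv works internally, and isNounObject is a hypothetical name.

```typescript
interface NounObject {
  noun: string;
  translation: string;
}

// Hypothetical guard: true only if both required fields are strings.
function isNounObject(value: unknown): value is NounObject {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return (
    typeof candidate.noun === "string" &&
    typeof candidate.translation === "string"
  );
}

// Both values are typed unknown, as anything returned by JSON.parse should be treated.
const good: unknown = JSON.parse('{"noun":"manzana","translation":"apple"}');
const bad: unknown = JSON.parse('{"noun":"manzana"}');
```

A compiled ajv validator performs this sort of check for every property in the schema, including the nested conjugations, which is tedious to write by hand.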

Step Three: Building Our Front End

Our last step is to build out a front end application that consumes the translation API we built. We will be using DaisyUI's TailwindCSS classes to manage our styles. Let's set up DaisyUI now.

Creating a Layout Wrapper

If you have been following along, you should already have DaisyUI installed as a dependency in your project, but if not, run npm i -D daisyui@latest to install it as a dev dependency. Next we need to open our tailwind.config.ts file in the root directory of the project and add DaisyUI as a plugin,

//tailwind.config.ts

import type { Config } from "tailwindcss";

const config: Config = {

content: [

"./src/pages/**/*.{js,ts,jsx,tsx,mdx}",

"./src/components/**/*.{js,ts,jsx,tsx,mdx}",

"./src/app/**/*.{js,ts,jsx,tsx,mdx}",

],

plugins: [require("daisyui")],

};

export default config;

With DaisyUI installed, start by creating a /components directory under /src . We will store our React components for use in our NextJS frontend here. Let's create a simple layout to wrap our application using components from DaisyUI. Create a /layout directory under /src/components and add the following three files: Header.tsx , Footer.tsx , and Layout.tsx ,

//Header.tsx

function Header() {

return (

<div className="navbar bg-base-100">

<a className="btn btn-ghost text-xl">AI Spanish Translator</a>

</div>

);

}

export default Header;

//Footer.tsx

function Footer() {

return (

<footer className="footer footer-center p-4 bg-base-300 text-base-content">

<aside>

<p>Copyright © 2023 - All rights reserved by ACME Industries Ltd</p>

</aside>

</footer>

);

}

export default Footer;

//Layout.tsx

import Header from "./Header";

import Footer from "./Footer";

//props typing

interface Props {

children: React.ReactNode;

}

export default function Layout({ children }: Props) {

return (

<div className="h-screen flex flex-col">

<Header />

<main className="flex-grow">{children}</main>

<Footer />

</div>

);

}

Notice that Layout accepts a children prop which is typed as a ReactNode. This means that any component we wrap with our Layout component will be wrapped with our Header component above it and our Footer component below. Let's use our Layout in the application next. Locate _app.tsx under the /src/pages directory. In a NextJS application, this component is responsible for rendering any component we mount at a given route. Let's modify it to use our Layout component,

//_app.tsx

import "@/styles/globals.css";

import type { AppProps } from "next/app";

//import components

import Layout from "@/components/layout/Layout";

export default function App({ Component, pageProps }: AppProps) {

return (

<Layout>

<Component {...pageProps} />

</Layout>

);

}

Now let's remove the boilerplate from when we scaffolded the project using create-next-app . Navigate to /src/pages/index.tsx . This file is our main entry point and the default component to mount when we launch our NextJS application's development server. Replace the code with the following,

//index.tsx

export default function Home() {

return <div>hello world!</div>;

}

Next navigate to /src/styles/globals.css and remove everything except for the TailwindCSS declarations at the top,

//globals.css

@tailwind base;

@tailwind components;

@tailwind utilities;

Let's try running our web application using the development server. In a terminal, navigate to your project directory and run npm run dev . This command should start the NextJS development server, and if you navigate to http://localhost:3000/ in a web browser, you should see the hello world! page rendered inside our layout.

Creating Our Global State Store

We will use Zustand to create a global state store that shares the object representing our translated sentence across our application. If you didn't install Zustand and axios above, do so now with npm i zustand axios . Under /src create a new directory called /stores . In /stores create a new file called translationStore.tsx . We will put our Zustand store here. Let's import Zustand's create function and define a TypeScript type that describes what sort of state and functionality our store should have,

//translationStore.tsx

import { create } from "zustand";

import type { TranslationObject } from "@/types/translationTypes";

interface TranslationStore {

translation: TranslationObject | null;

setTranslation: (translation: TranslationObject | null) => void;

translationIsLoading: boolean;

setTranslationIsLoading: (isLoading: boolean) => void;

}

Our TranslationStore interface defines a Zustand store as an object. It has a getter and setter for a translation property which will either be equal to a TranslationObject (matching the schema we enforce on the JSON returned through the OpenAI API) or null, meaning no translation object exists. We also define a getter and setter for whether a translation is currently loading.

Let's create the actual Zustand store next,

//translationStore.tsx

...

const useTranslationStore = create<TranslationStore>()((set) => ({

translation: null,

setTranslation(translation) {

set({ translation });

},

translationIsLoading: false,

setTranslationIsLoading(isLoading) {

set({ translationIsLoading: isLoading });

},

}));

export default useTranslationStore;

Zustand supports a React hook based API. We export our useTranslationStore hook and can use it to access the state we have defined anywhere in our application.
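If the set function feels opaque, the idea behind it can be sketched in a few lines of plain TypeScript. This is not Zustand's implementation and it omits the React hook entirely. createTinyStore is a hypothetical name used only to show how a partial update is merged into the state and announced to subscribers.

```typescript
type Listener<T> = (state: T) => void;

// Hypothetical miniature store, for illustration only.
function createTinyStore<T extends object>(initial: T) {
  let state = initial;
  const listeners = new Set<Listener<T>>();
  return {
    getState: (): T => state,
    // Merge the partial update into a new state object and notify subscribers.
    setState: (partial: Partial<T>): void => {
      state = { ...state, ...partial };
      listeners.forEach((listener) => listener(state));
    },
    // Register a listener and return a function that removes it again.
    subscribe: (listener: Listener<T>): (() => void) => {
      listeners.add(listener);
      return () => {
        listeners.delete(listener);
      };
    },
  };
}

const store = createTinyStore({ translationIsLoading: false, count: 0 });
let notifications = 0;
const unsubscribe = store.subscribe(() => {
  notifications += 1;
});
store.setState({ translationIsLoading: true }); // subscriber is notified
unsubscribe();
store.setState({ count: 1 }); // no subscriber left to notify
```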

Getting User Input

Next, let's define a component that accepts an English sentence from a user to be submitted through our translator endpoint to the OpenAI API.

Under /src/components/ create a new directory called prompt-box and add a PromptBox.tsx file to it with the following code,

//PromptBox.tsx

function PromptBox() {

return (

<div className="w-full flex flex-col space-y-4">

<h3 className="text-xl font-semibold">

Enter English text to translate it to Spanish:

</h3>

<div className="flex space-x-4">

<textarea

className="textarea textarea-accent textarea-lg w-full"

placeholder="type here"

/>

<button className="btn btn-success">Translate</button>

</div>

</div>

);

}

export default PromptBox;

This file defines a functional component called PromptBox that will allow a user to input an English sentence to be translated to Spanish. After the user types their English sentence into the textarea field, we want to submit it to our translator API when the user clicks the button. Let's set up that functionality. First let's import what we need above the functional component,

//PromptBox.tsx

import { useState } from "react";

import axios from "axios";

import type { TranslationResponseObject } from "@/types/translationTypes";

import useTranslationStore from "@/stores/translationStore";

...

Next, let's define a POST request to our translator API using axios ,

//PromptBox.tsx

import { useState } from "react";

import axios from "axios";

import type { TranslationResponseObject } from "@/types/translationTypes";

import useTranslationStore from "@/stores/translationStore";

// create an axios POST request to the api

const getTranslation = async (prompt: string) => {

const response = await axios.post<TranslationResponseObject>(

"/api/translator",

{ prompt: prompt }

);

return response.data;

};

...

getTranslation will create a POST request to our translator API and return a Promise that is typed as our TranslationResponseObject . We can use this function to set state in our client's Zustand store,

//PromptBox.tsx

...

function PromptBox() {

//store selector

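// select each function separately so the selector never returns a new array

const setTranslation = useTranslationStore((state) => state.setTranslation);

const setTranslationIsLoading = useTranslationStore(

(state) => state.setTranslationIsLoading

);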

//component state

const [prompt, setPrompt] = useState<string>("");

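const handleSubmit = async () => {

if (prompt === "") return;

setTranslationIsLoading(true);

try {

const response = await getTranslation(prompt);

if (response.success) {

setTranslation(response.translation);

}

} catch (error) {

// axios rejects on non-2xx responses, such as the 500 our endpoint sends on failure

console.error(error);

} finally {

// always clear the loading flag, even if the request failed

setTranslationIsLoading(false);

}

};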

return (

<div className="w-full flex flex-col space-y-4">

<h3 className="text-xl font-semibold">

Enter English text to translate it to Spanish:

</h3>

<div className="flex space-x-4">

<textarea

className="textarea textarea-accent textarea-lg w-full"

placeholder="type here"

value={prompt}

onChange={(e) => setPrompt(e.target.value)}

/>

<button onClick={handleSubmit} className="btn btn-success">

Translate

</button>

</div>

</div>

);

}

export default PromptBox;

First we grab the setTranslation and setTranslationIsLoading functions from our Zustand store. Next we define some state for the text input our user will write into the textarea in our component. Then we define a handleSubmit function which will run when our button is clicked. It sets our translationIsLoading global state to true, awaits a response with a TranslationResponseObject from our translator API, and then sets our translation global state to the response's translation if it's a success.

Let's add PromptBox to our application. Head back to index.tsx and modify it as follows,

//import stores

import useTranslationStore from "@/stores/translationStore";

//import components

import PromptBox from "@/components/prompt-box/PromptBox";

export default function Home() {

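// select each value separately so the selector never returns a new array

const translation = useTranslationStore((state) => state.translation);

const translationIsLoading = useTranslationStore(

(state) => state.translationIsLoading

);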

return (

<div className="w-full h-full px-4 py-4 flex flex-col space-y-4 bg-neutral">

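{translationIsLoading && (

<span className="loading loading-spinner loading-lg self-center"></span>

)}

{!translationIsLoading && !translation && <PromptBox />}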

</div>

);

}

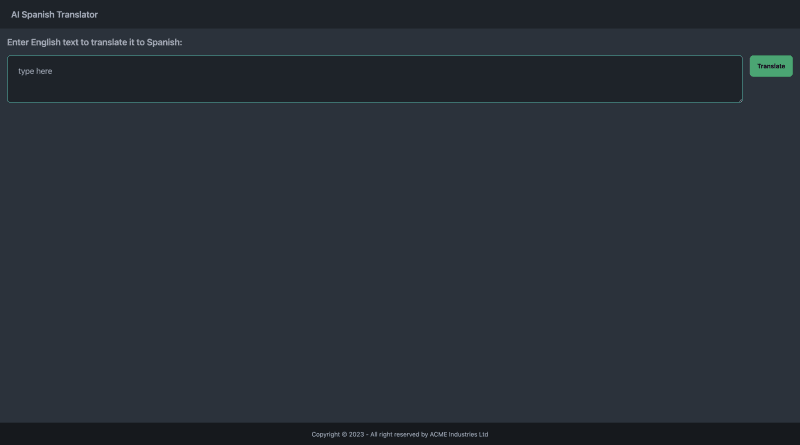

Here we get the translation and translationIsLoading state from our Zustand store and conditionally render PromptBox only if both are null. If a translation is loading, we show a loading spinner instead. Your application should now look like this:

Creating Display Components For Our Translation

Let's create the final components for the application that display the content from the TranslationObject generated by the AI.

Create a translation-components directory under /src/components and add a directory called /sentence to it. Then create Sentence.tsx under src/components/translation-components/sentence/ with the following,

//Sentence.tsx

//import stores

import useTranslationStore from "@/stores/translationStore";

function Sentence() {

// store selector

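// select each value separately so the selector never returns a new array

const translation = useTranslationStore((state) => state.translation);

const setTranslation = useTranslationStore((state) => state.setTranslation);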

return (

<div className="card w-full bg-base-300 shadow-xl">

<div className="card-body">

<div className="card-actions justify-end">

<button

onClick={() => setTranslation(null)}

className="btn btn-primary btn-sm"

>

Translate something else

</button>

</div>

<p className="text-xl">English: {translation?.sentence}</p>

<p className="text-xl">

Translation: {translation?.translatedSentence}

</p>

</div>

</div>

);

}

export default Sentence;

This component grabs the translation global state from our store and displays the original sentence and the translated sentence. It also has a button which sets translation to null in our store which will allow us to enter a new sentence.

Next create a directory, /src/components/translation-components/nouns . We are going to use DaisyUI's "card" component class to create a display card for each noun identified by the AI in the translated sentence. Create NounCard.tsx under the newly created /nouns directory with,

//NounCard.tsx

import type { NounObject } from "@/types/translationTypes";

//props typing

interface Props {

noun: NounObject;

}

function NounCard({ noun }: Props) {

return (

<div className="card w-full bg-base-100 shadow-xl">

<div className="card-body">

<h2 className="card-title">{noun.noun}</h2>

<p>Translation: {noun.translation}</p>

</div>

</div>

);

}

export default NounCard;

This component accepts a NounObject which we defined earlier in our types file. It renders a card that contains the Spanish noun and its translation. We will need a parent component responsible for rendering all of the NounCard components for each noun in the sentence. Create Nouns.tsx in the same directory with the following code,

//Nouns.tsx

//import stores

import useTranslationStore from "@/stores/translationStore";

//import components

import NounCard from "./NounCard";

function Nouns() {

// store selector

const translation = useTranslationStore((state) => state.translation);

if (!translation?.nouns) return null;

return (

<div className="flex flex-col space-y-4">

<h3 className="text-lg">Nouns:</h3>

<div className="grid grid-cols-3 gap-4">

{translation.nouns.map((noun, index) => (

<NounCard noun={noun} key={index} />

))}

</div>

</div>

);

}

export default Nouns;

Nouns checks if the .nouns property exists on our translation global state and then returns a grid of NounCard components, one for each noun identified by the AI. Let's set up something similar for the verbs in the sentence. Create a src/components/translation-components/verbs/ directory with two components.

VerbCard.tsx :

//VerbCard.tsx

//import types

import type { VerbObject } from "@/types/translationTypes";

//props typing

interface Props {

verb: VerbObject;

}

function VerbCard({ verb }: Props) {

return (

<div className="card w-full bg-base-100 shadow-xl px-4 py-4">

<div className="card-body">

<h2 className="card-title">

{verb.infinitive} - {verb.translation}

</h2>

</div>

{/* present conjugations */}

<div className="overflow-x-auto">

<table className="table">

{/* head */}

<thead>

<tr>

<th>Tense</th>

<th>Yo</th>

<th>Tú</th>

<th>Él/Ella</th>

<th>Nosotros</th>

<th>Vosotros</th>

<th>Ellos/Ellas</th>

</tr>

</thead>

<tbody>

<tr>

<th>Present</th>

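<td>{verb.conjugations.present.yo}</td>

<td>{verb.conjugations.present.tu}</td>

<td>{verb.conjugations.present.el}</td>

<td>{verb.conjugations.present.nosotros}</td>

<td>{verb.conjugations.present.vosotros}</td>

<td>{verb.conjugations.present.ellos}</td>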

</tr>

</tbody>

</table>

</div>

</div>

);

}

export default VerbCard;

The VerbCard component serves a similar function as our NounCard component except it also produces a table containing the present tense conjugations of the Spanish verb identified by the AI.
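The order of the table cells has to match the order of the header columns. One way to keep the two in step is to derive the cells from a single list of pronoun keys. The sketch below shows the idea as a plain function. PRONOUN_ORDER and conjugationRow are hypothetical names, and VerbCard does not depend on them.

```typescript
interface ConjugationPronounsObject {
  yo: string;
  tu: string;
  el: string;
  nosotros: string;
  vosotros: string;
  ellos: string;
}

// Single source of truth for the column order (hypothetical name).
const PRONOUN_ORDER = ["yo", "tu", "el", "nosotros", "vosotros", "ellos"] as const;

// Returns the conjugated forms in the same order as the table header.
function conjugationRow(present: ConjugationPronounsObject): string[] {
  return PRONOUN_ORDER.map((pronoun) => present[pronoun]);
}

// Hand-written example data for the verb "comer".
const comer: ConjugationPronounsObject = {
  yo: "como",
  tu: "comes",
  el: "come",
  nosotros: "comemos",
  vosotros: "coméis",
  ellos: "comen",
};

const row = conjugationRow(comer);
```

In a component, the resulting array could be mapped to td elements, so that adding or reordering a pronoun only requires touching one list.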

Verbs.tsx :

//Verbs.tsx

//import stores

import useTranslationStore from "@/stores/translationStore";

//import components

import VerbCard from "./VerbCard";

function Verbs() {

// store selector

const translation = useTranslationStore((state) => state.translation);

if (!translation?.verbs) return null;

return (

<div className="flex flex-col space-y-4">

<h3 className="text-lg">Verbs:</h3>

<div className="flex flex-col space-y-4">

{translation.verbs.map((verb, index) => (

<VerbCard verb={verb} key={index} />

))}

</div>

</div>

);

}

export default Verbs;

The Verbs component similarly renders a column of VerbCard components using our TranslationObject's verbs property.

Wrapping Up

We now have all the pieces in place to finish our simple application! Let's incorporate the Sentence , Nouns and Verbs components.

Let's import those components into our index.tsx file and render them whenever a translation exists in our Zustand store. Here is what your final index.tsx file should look like,

//index.tsx

//import stores

import useTranslationStore from "@/stores/translationStore";

//import components

import PromptBox from "@/components/prompt-box/PromptBox";

import Nouns from "@/components/translation-components/nouns/Nouns";

import Sentence from "@/components/translation-components/sentence/Sentence";

import Verbs from "@/components/translation-components/verbs/Verbs";

export default function Home() {

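// select each value separately so the selector never returns a new array

const translation = useTranslationStore((state) => state.translation);

const translationIsLoading = useTranslationStore(

(state) => state.translationIsLoading

);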

return (

<div className="w-full h-full px-4 py-4 flex flex-col space-y-4 bg-neutral">

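{translationIsLoading && (

<span className="loading loading-spinner loading-lg self-center"></span>

)}

{!translationIsLoading && !translation && <PromptBox />}

{translation && (

<>

<Sentence />

<Nouns />

<Verbs />

</>

)}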

</div>

);

}
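In the app's normal flow the page is always in one of three states: waiting for input, loading, or showing a translation. Expressed as a plain function, with View and selectView as hypothetical names used only for this illustration (letting the loading state take precedence is an assumption):

```typescript
type View = "prompt" | "loading" | "results";

// Hypothetical helper mirroring the conditional rendering in index.tsx.
function selectView(hasTranslation: boolean, isLoading: boolean): View {
  if (isLoading) return "loading";
  return hasTranslation ? "results" : "prompt";
}
```

Thinking of the page this way makes it easier to add further states later, such as an error view for failed translations.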

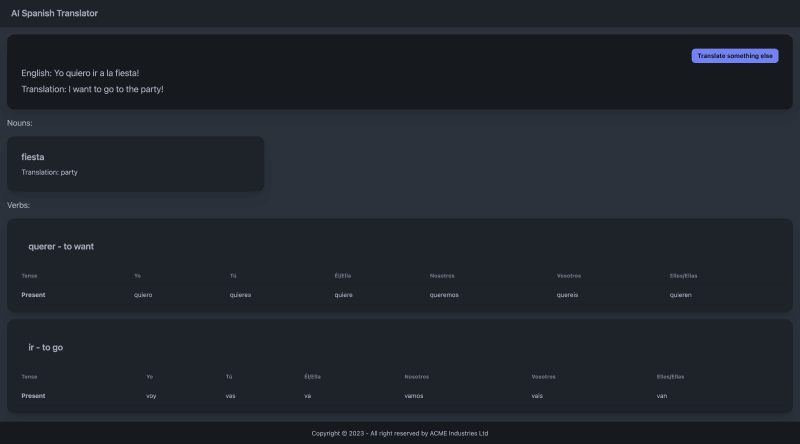

And this is the result after inputting an English sentence into the translator app:

Closing Thoughts

OpenAI's function calling feature represents a breakthrough in how developers can integrate LLM functionality into their projects on the web. We have demonstrated a basic application that taps into this feature in order to translate plain language into strictly formatted JSON code. If you followed along with the tutorial the whole way through, congratulations! As mentioned above, I encourage you to experiment with either translating another language, extracting different elements of a language or creating something entirely different.